AI Studio

Mistral AI Studio is a platform where you can access and manage models, usage, APIs, organizations, workspaces, and a variety of other features.

We offer flexible access to our models through a range of options, services, and customizable solutions (including playgrounds, fine-tuning, self-hosting, and more) to meet your specific needs.

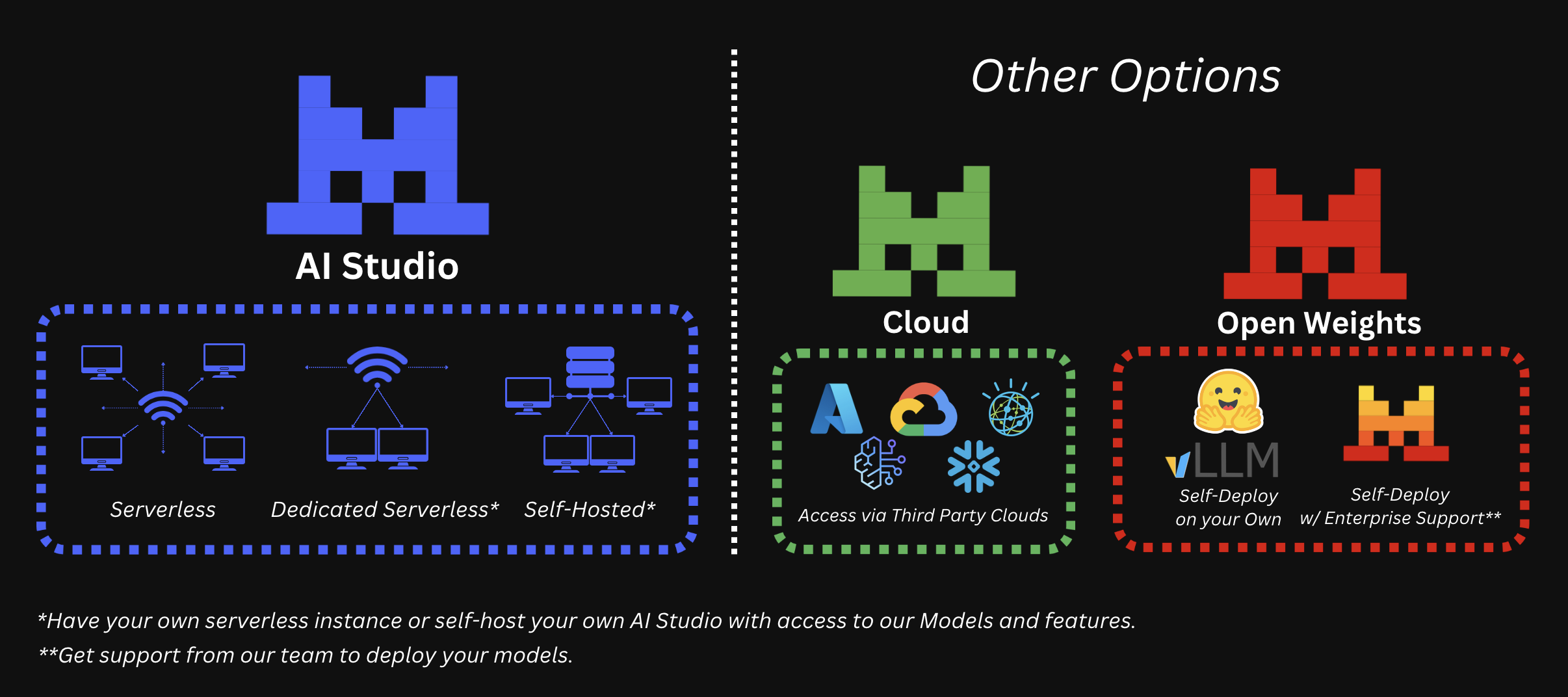

AI Studio (previously "La Plateforme") provides API endpoints for pay-as-you-go access to our latest models. It also lets you manage workspaces, usage, and a variety of other features, forming a full stack with multiple options for diverse enterprise uses. We offer three main solutions:

- Serverless: Public API endpoints managed by the Mistral team through AI Studio.

- Dedicated Serverless: Dedicated instances tailored to your enterprise needs through a dedicated AI Studio. Reach out to us if you're interested!

- Self-Hosted: Dedicated on-premises instances for your enterprise needs through a self-hosted AI Studio. Reach out to us if you're interested!

Below, you can find a non-exhaustive table with further details regarding each offering.

| Feature | AI Studio - Serverless | AI Studio - Dedicated Serverless | AI Studio - Self-Hosted (VPC/on-prem) |
|---|---|---|---|
| Infrastructure | Owned & managed by Mistral | Owned & managed by Mistral | Owned & managed by Client |
| Data Access & Storage | Shared data plane, multi-tenant storage | Virtually isolated per Client | Hosted by Client |
| Network | Public Internet | Private subnet | Hosted by Client |
| Inference Compute | Shared | Shared, with optional dedicated GPUs | Hosted by Client |

Some features are model-dependent and may vary; contact our team for further details.

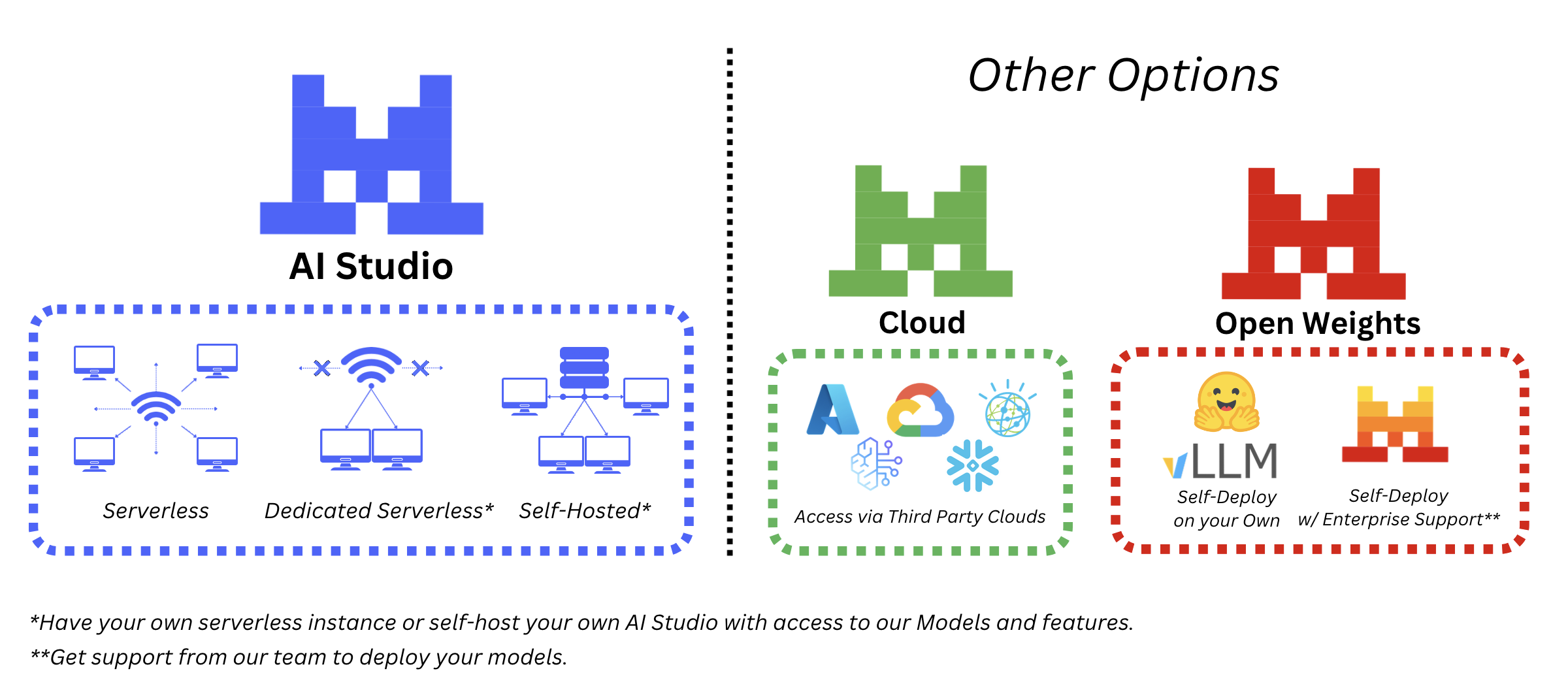

Other Options

There are also other ways of accessing and using our models for your needs:

- Third-Party Cloud: You can access Mistral AI models via your preferred cloud platform.

- Open Weights: You can self-deploy our models on your own infrastructure, in two ways:

  - Self-Deploy on Your Own: You can self-deploy our open models yourself. Multiple open-weights models are available under the Apache 2.0 License; you can find them on Hugging Face.

  - Self-Deploy with Enterprise Support: You can also self-deploy our models, both open and frontier, with enterprise support. Reach out to us if you're interested!

API Access with AI Studio - Serverless

You need to activate payments on your account to enable your API keys in AI Studio. Check out the Quickstart guide to make your first Mistral API request.
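As a rough illustration of such a first request, the sketch below calls the chat completions endpoint using only Python's standard library. It assumes your key is stored in a `MISTRAL_API_KEY` environment variable and uses `mistral-small-latest` as an example model name; the Quickstart guide and API reference remain the authoritative source for endpoints and parameters.

```python
import json
import os
import urllib.request

# Chat completions endpoint of the Serverless API.
API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "mistral-small-latest") -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat completion."""
    payload = {
        "model": model,  # example model name; pick any model your key can access
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    # Assumption: the API key lives in the MISTRAL_API_KEY environment variable.
    request = build_chat_request(os.environ["MISTRAL_API_KEY"], prompt)
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__" and "MISTRAL_API_KEY" in os.environ:
    print(chat("What is the best French cheese?"))
```

Building the request separately from sending it makes it easy to inspect the exact headers and JSON body before any network call is made.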

Explore the diverse capabilities of our models:

- Completion

- Embeddings

- Function calling

- JSON mode

- Guardrailing

- Much more...
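Several of these capabilities are selected through fields of the request body rather than through separate services. The sketch below builds example bodies for a few of them: the embeddings body is meant for `POST https://api.mistral.ai/v1/embeddings`, the others for the chat completions endpoint. It assumes the `mistral-embed` and `mistral-small-latest` model names and uses a hypothetical `get_weather` tool; check the API reference for the full parameter list before relying on any field shown here.

```python
import json


def chat_body(prompt: str, model: str = "mistral-small-latest", **options) -> dict:
    """Base chat body; extra keyword arguments become top-level request fields."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    body.update(options)
    return body


def embeddings_body(texts: list) -> dict:
    """Embeddings: one vector is returned per input string."""
    return {"model": "mistral-embed", "input": texts}


def json_mode_body(prompt: str) -> dict:
    """JSON mode: ask the model to reply with a valid JSON object."""
    return chat_body(prompt, response_format={"type": "json_object"})


def function_calling_body(prompt: str) -> dict:
    """Function calling: expose one tool; the model may reply with a tool call."""
    weather_tool = {
        "type": "function",
        "function": {
            # Hypothetical tool: your own code must implement and execute it.
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return chat_body(prompt, tools=[weather_tool], tool_choice="auto")


def guarded_body(prompt: str) -> dict:
    """Guardrailing: enable the optional safety system prompt."""
    return chat_body(prompt, safe_prompt=True)


if __name__ == "__main__":
    print(json.dumps(json_mode_body("List three colors as JSON."), indent=2))
```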

Cloud-based deployments

For a comprehensive list of options to deploy and consume Mistral AI models on the cloud, head to the cloud deployment section.

Raw model weights

Raw model weights can be used in several ways:

- For self-deployment, on cloud or on premises, using either TensorRT-LLM or vLLM, head to Deployment.

- For research, head to our reference implementation repository.

- For local deployment on consumer-grade hardware, check out the llama.cpp project or Ollama.
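For the Ollama route, a minimal sketch of a local request is shown below. It assumes Ollama is running on its default port (11434) and that a Mistral model has been pulled under the `mistral` tag (for example with `ollama pull mistral`); adjust the model name to whatever you pulled.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumption: default port).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_local_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming chat request for Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # return one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def local_chat(prompt: str) -> str:
    """Send the request to the local server and return the reply text."""
    with urllib.request.urlopen(build_local_request(prompt), timeout=120) as response:
        return json.load(response)["message"]["content"]
```

Because everything runs on your own machine, no API key is involved; the generous timeout allows for slower inference on consumer-grade hardware.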