Reasoning

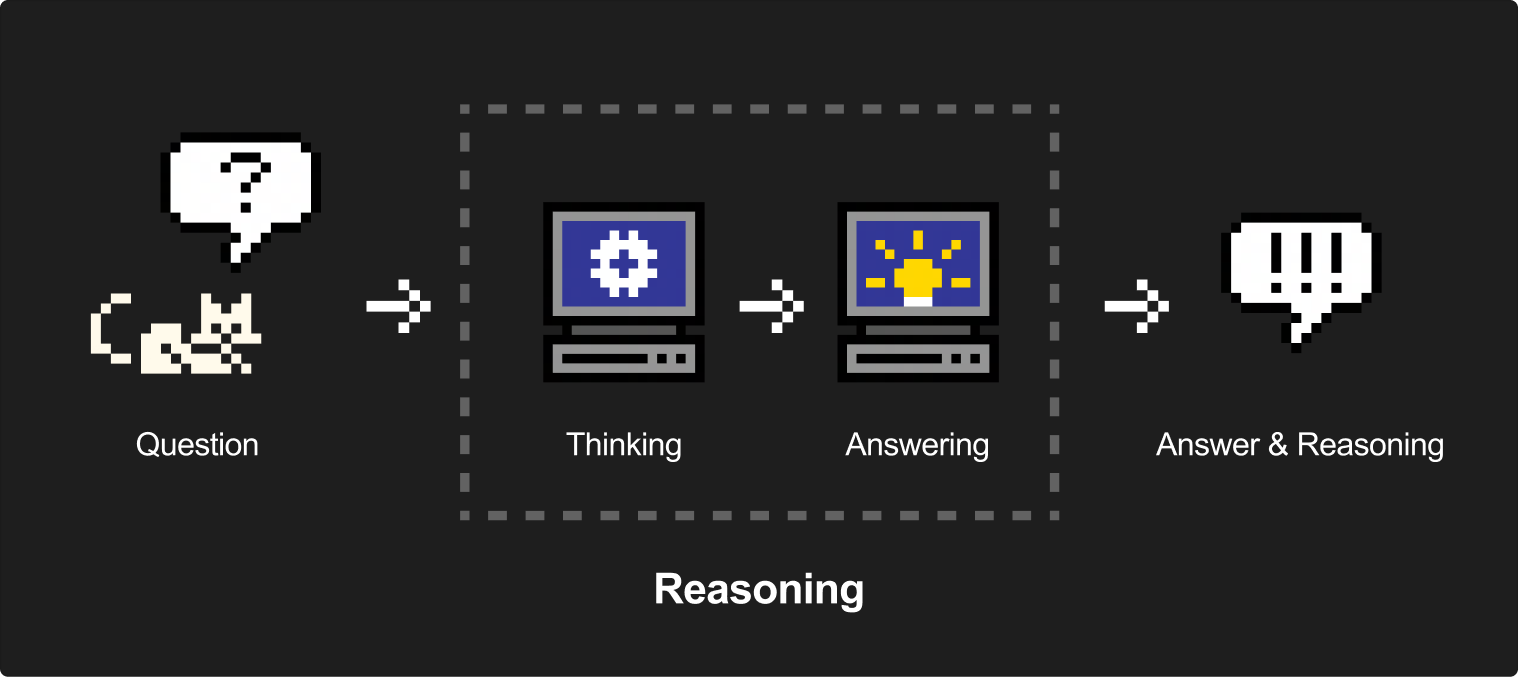

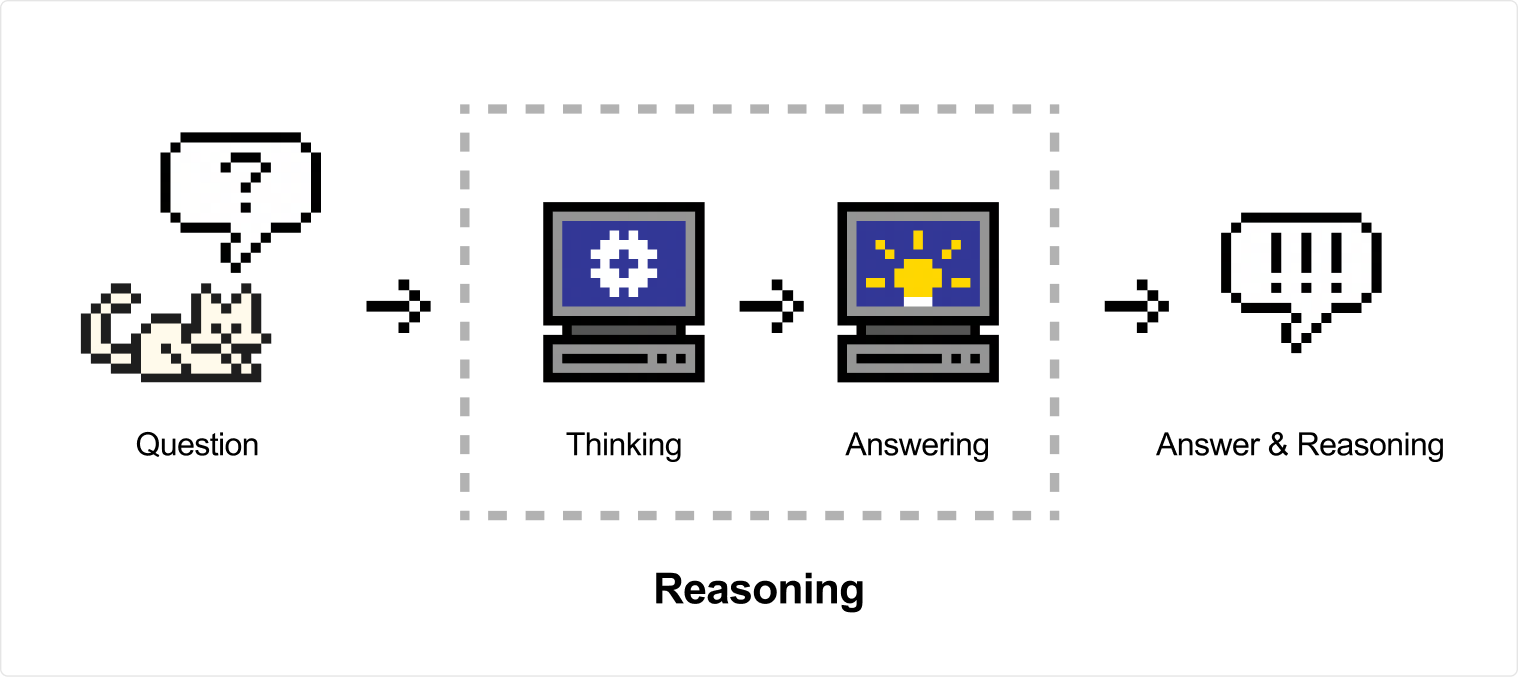

Reasoning is the next step beyond Chain of Thought (CoT), a term used to describe the logical steps a model generates before reaching a conclusion. Reasoning models strengthen this behavior through training that encourages them to freely generate chains of thought before producing the final answer. This lets them explore a problem more thoroughly and reach the best solution they can by spending extra compute time generating more tokens, an approach also known as test-time computation.

Reasoning models excel at complex use cases such as math and coding, but they can be used in a wide range of scenarios to solve diverse problems. Their output is therefore split into two sections: the thinking chunks, which contain the reasoning traces the model generated, and the final answer, which sits outside the thinking chunks.

Before continuing, we recommend reading the Chat Completions documentation to learn more about the chat completions API and how to use it.

Before You Start

Reasoning Models

Currently we have two reasoning models:

magistral-small-latest: Our smaller, open model for open research and efficient reasoning.

magistral-medium-latest: Our more powerful reasoning model balancing performance and cost.

Currently, `-latest` points to `-2509`, the most recent version of our reasoning models. If you were previously using `-2506`, you need to migrate how you handle thinking chunks.

- `-2509` and `-2507` (new): Use tokenized thinking chunks via control tokens, providing the thinking traces in their own content chunk type, separate from the answer.

- `-2506` (old): Used `<think>\n` and `\n</think>\n` tags as strings to encapsulate the thinking traces, for both input and output, within the same content type as the answer.
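If you are migrating from `-2506`, stored outputs still carry the old string tags. The sketch below uses a hypothetical `legacy_to_chunks` helper (not part of any SDK) to split such a string on the `<think>\n` and `\n</think>\n` tags and rebuild it in the thinking/text chunk layout shown under Full Reasoning Output. It assumes the tags appear exactly as described above, at the start of the string, and treats anything else as a plain answer.

```python
import re

# -2506 wrapped reasoning in literal "<think>\n" ... "\n</think>\n" strings.
# Group 1 captures the thinking traces, group 2 everything after the closing tag.
_THINK_PATTERN = re.compile(r"<think>\n(.*?)\n</think>\n(.*)", re.DOTALL)


def legacy_to_chunks(content: str) -> list[dict]:
    """Convert a -2506-style string into thinking/text chunk dicts (hypothetical helper)."""
    match = _THINK_PATTERN.match(content)
    if match is None:
        # No thinking tags at the start: treat the whole string as the final answer.
        return [{"type": "text", "text": content}]
    thinking, answer = match.group(1), match.group(2)
    return [
        {"type": "thinking", "thinking": [{"type": "text", "text": thinking}]},
        {"type": "text", "text": answer},
    ]


legacy = "<think>\nWorking it out...\n</think>\nFinal answer."
print(legacy_to_chunks(legacy))
```

The lazy `(.*?)` with `re.DOTALL` lets the thinking traces span several lines while still stopping at the first closing tag.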

Usage

How to use Reasoning Models

You use our reasoning models much like more classic LLMs. However, reasoning models generate more tokens for the reasoning step and often require a specific system prompt for optimal performance.

System Prompt

To get the best performance out of our models, we recommend the following system prompt (currently the default):

```json
{
  "role": "system",
  "content": [
    {
      "type": "text",
      "text": "# HOW YOU SHOULD THINK AND ANSWER\n\nFirst draft your thinking process (inner monologue) until you arrive at a response. Format your response using Markdown, and use LaTeX for any mathematical equations. Write both your thoughts and the response in the same language as the input.\n\nYour thinking process must follow the template below:"
    },
    {
      "type": "thinking",
      "thinking": [
        {
          "type": "text",
          "text": "Your thoughts or/and draft, like working through an exercise on scratch paper. Be as casual and as long as you want until you are confident to generate the response to the user."
        }
      ]
    },
    {
      "type": "text",
      "text": "Here, provide a self-contained response."
    }
  ]
}
```

You can also opt out of the default system prompt by setting prompt_mode to null in the API. The prompt_mode parameter has two possible values:

- reasoning: the default behavior, where the default system prompt will be used explicitly.
- null: no system prompt will be used whatsoever.

Providing your own system prompt overrides the default system prompt.
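As a minimal sketch of these three options, the hypothetical helper `build_payload` below assembles a chat completions request body as a plain dictionary and serializes it with `json.dumps`, so Python's `None` appears as JSON `null`. The field names `model`, `messages`, and `prompt_mode` follow this page; the short question is illustrative, and sending the HTTP request is left out.

```python
import json

MODEL = "magistral-medium-latest"
QUESTION = {"role": "user", "content": "How old is John?"}  # illustrative message


def build_payload(prompt_mode="reasoning", system_prompt=None):
    """Build a request body dict (hypothetical helper, not an SDK function).

    prompt_mode="reasoning" keeps the default system prompt, prompt_mode=None
    opts out of it, and a custom system_prompt replaces it.
    """
    messages = [QUESTION]
    if system_prompt is not None:
        # A system message of your own overrides the default system prompt.
        messages = [{"role": "system", "content": system_prompt}] + messages
    return {"model": MODEL, "messages": messages, "prompt_mode": prompt_mode}


# Opting out: prompt_mode is serialized as null.
print(json.dumps(build_payload(prompt_mode=None)))
```

Keeping the default as `"reasoning"` makes the recommended behavior explicit in the request rather than relying on the server default.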

Reasoning with Chat Completions

You can use our reasoning models the same way you would use our other text models. Here is an example using our chat completions endpoint:

```python
import os

from mistralai import Mistral

api_key = os.environ["MISTRAL_API_KEY"]
model = "magistral-medium-latest"

client = Mistral(api_key=api_key)

chat_response = client.chat.complete(
    model=model,
    messages=[
        {
            "role": "user",
            "content": "John is one of 4 children. The first sister is 4 years old. Next year, the second sister will be twice as old as the first sister. The third sister is two years older than the second sister. The third sister is half the age of her older brother. How old is John?",
        },
    ],
    # prompt_mode="reasoning" if you want to explicitly use the default system prompt,
    # or None if you want to opt out of the default system prompt.
)

# The message content holds both the thinking chunks and the final answer.
print(chat_response.choices[0].message.content)
```

Full Reasoning Output

Below we provide the full output of the model so you can see the reasoning traces and the final answer in detail.

The model's output includes different content chunks, mainly a thinking chunk containing the reasoning traces and a text chunk containing the answer, like so:

```json
"content": [
  {
    "type": "thinking",
    "thinking": [
      {
        "type": "text",
        "text": "*Thoughts and reasoning traces will go here.*"
      }
    ]
  },
  {
    "type": "text",
    "text": "*Final answer will go here.*"
  },
  ...
]
```
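To separate the two parts in your own code, a sketch like the hypothetical `split_reasoning` function below walks the content list and collects thinking text apart from answer text. It assumes the content is either a plain string or a list of dicts shaped like the content list above (for example, parsed from the raw JSON response body); SDK response objects may need converting to dicts first, and chunk types other than thinking and text are ignored.

```python
def split_reasoning(content):
    """Return (reasoning, answer) strings from a message's content (hypothetical helper)."""
    if isinstance(content, str):
        # No chunks at all: the whole content is the answer.
        return "", content
    thoughts, answer = [], []
    for chunk in content:
        if chunk.get("type") == "thinking":
            # A thinking chunk nests its own list of text chunks.
            thoughts.extend(
                part["text"] for part in chunk["thinking"] if part.get("type") == "text"
            )
        elif chunk.get("type") == "text":
            answer.append(chunk["text"])
        # Any other chunk type is skipped in this sketch.
    return "\n".join(thoughts), "".join(answer)


content = [
    {"type": "thinking", "thinking": [{"type": "text", "text": "Scratch work."}]},
    {"type": "text", "text": "Final answer."},
]
reasoning, answer = split_reasoning(content)
print(answer)
```

Answer chunks are concatenated directly because they are pieces of one response, while separate thinking segments are joined with newlines to keep them readable.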