La Plateforme

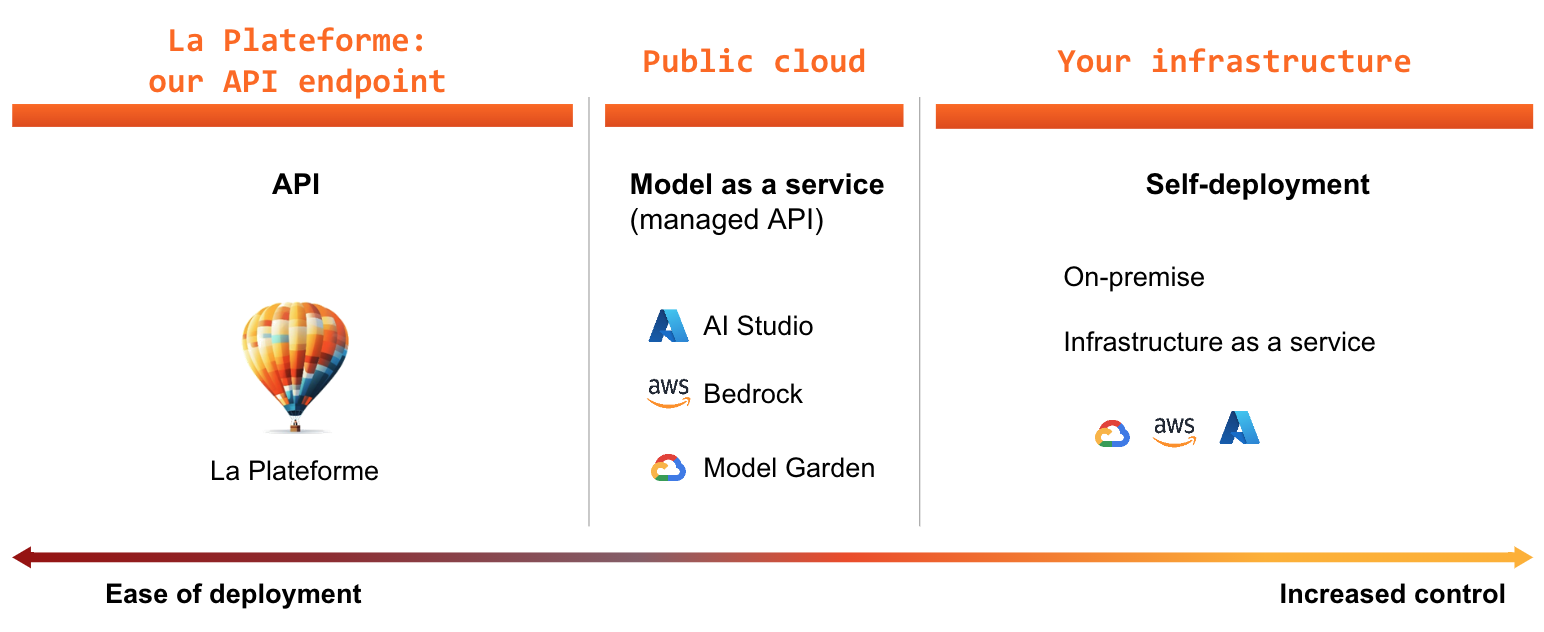

Mistral AI currently provides three types of access to Large Language Models:

- La Plateforme: API endpoints that give pay-as-you-go access to our latest models.

- Cloud: You can access Mistral AI models through your preferred cloud platform.

- Self-deployment: You can self-deploy our open-weights models on your own on-premise infrastructure. These models are released under the Apache 2.0 License and can be downloaded from Hugging Face or directly from the documentation.

API Access with La Plateforme

You need to activate payments on your account to enable your API keys on La Plateforme. See the Quickstart guide to make your first Mistral API request.
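As a rough illustration of what a first request can look like, here is a minimal sketch that uses only the Python standard library. The endpoint URL, the model name `mistral-small-latest`, and the `MISTRAL_API_KEY` environment variable are assumptions made for this example; the Quickstart guide and API reference remain the authoritative source for current values. The helper names `build_chat_request` and `ask` are hypothetical.

```python
import json
import os
import urllib.request

# Assumed values for illustration; confirm them against the API reference.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-small-latest"


def build_chat_request(api_key, prompt, model=MODEL):
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def ask(prompt):
    """Send the request and return the text of the first choice."""
    api_key = os.environ["MISTRAL_API_KEY"]  # assumed variable name
    with urllib.request.urlopen(build_chat_request(api_key, prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With an enabled API key exported as `MISTRAL_API_KEY`, calling `ask("What is the best French cheese?")` should return the model's reply as a string. Building the request separately from sending it makes the payload easy to inspect without network access.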

Explore the capabilities of our models:

Cloud-based deployments

For a comprehensive list of options for deploying and consuming Mistral AI models in the cloud, see the cloud deployment section.

Raw model weights

Raw model weights can be used in several ways:

- For self-deployment, in the cloud or on premise, using either TensorRT-LLM or vLLM, see the Deployment section.

- For research, see our reference implementation repository.

- For local deployment on consumer-grade hardware, check out the llama.cpp project or Ollama.
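To make the self-deployment option more concrete, the sketch below queries an open-weights model served locally by vLLM through its OpenAI-compatible HTTP server. It assumes the server was started separately (for example with `vllm serve mistralai/Mistral-7B-Instruct-v0.3`) and is listening on vLLM's default port 8000; the model choice, the `max_tokens` value, and the helper names `build_local_request` and `ask_local` are illustrative assumptions rather than recommended settings.

```python
import json
import urllib.request

# Assumptions: a vLLM server is already running locally on its default port
# and serves the model named below.
BASE_URL = "http://localhost:8000/v1"
MODEL = "mistralai/Mistral-7B-Instruct-v0.3"


def build_local_request(prompt, base_url=BASE_URL, model=MODEL, max_tokens=128):
    """Build (but do not send) a chat request for a local vLLM server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_local_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Because the local server exposes the same chat-completions request shape, client code written against a hosted endpoint can often be pointed at a self-deployed model by changing only the base URL and model name.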