MCP

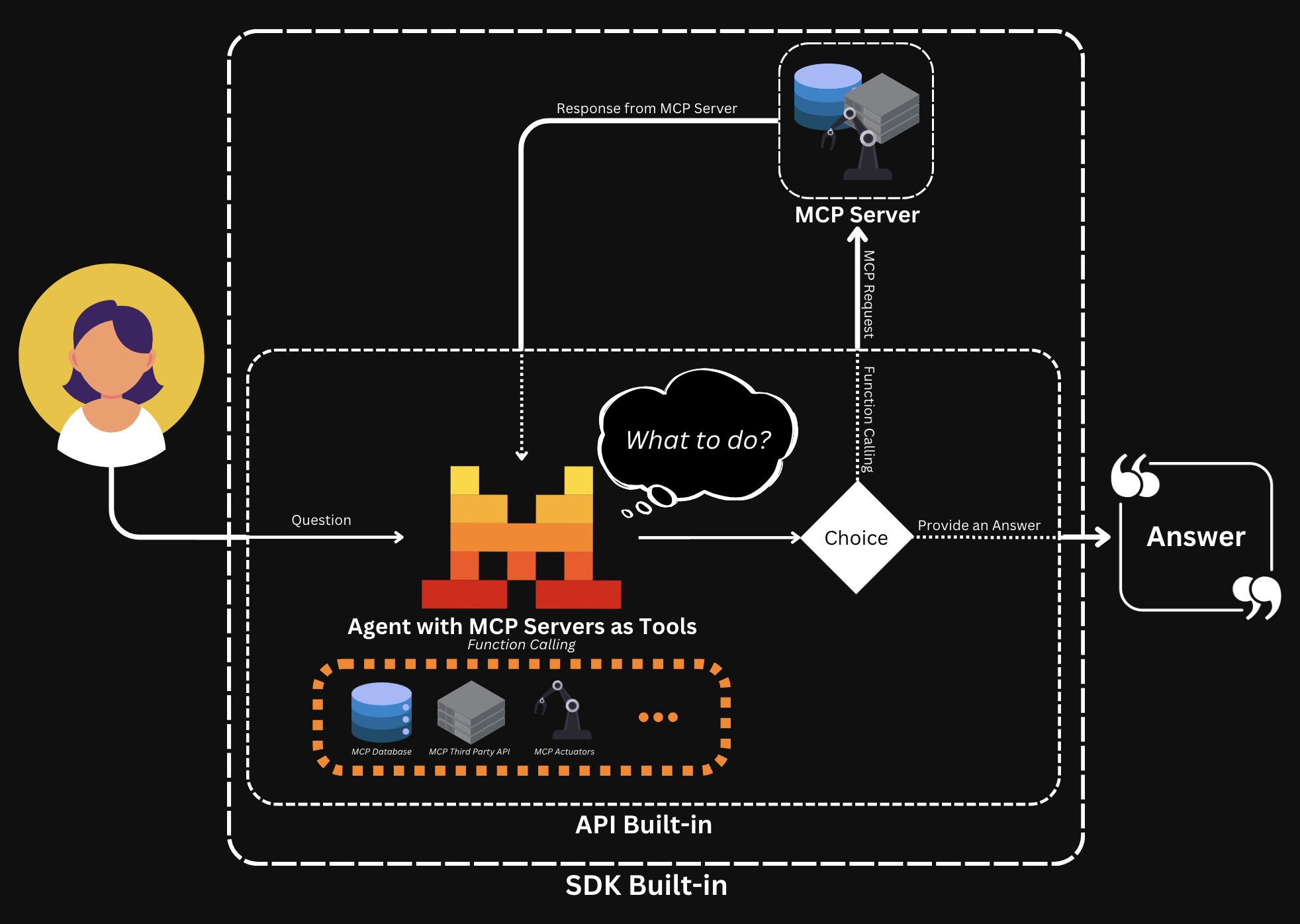

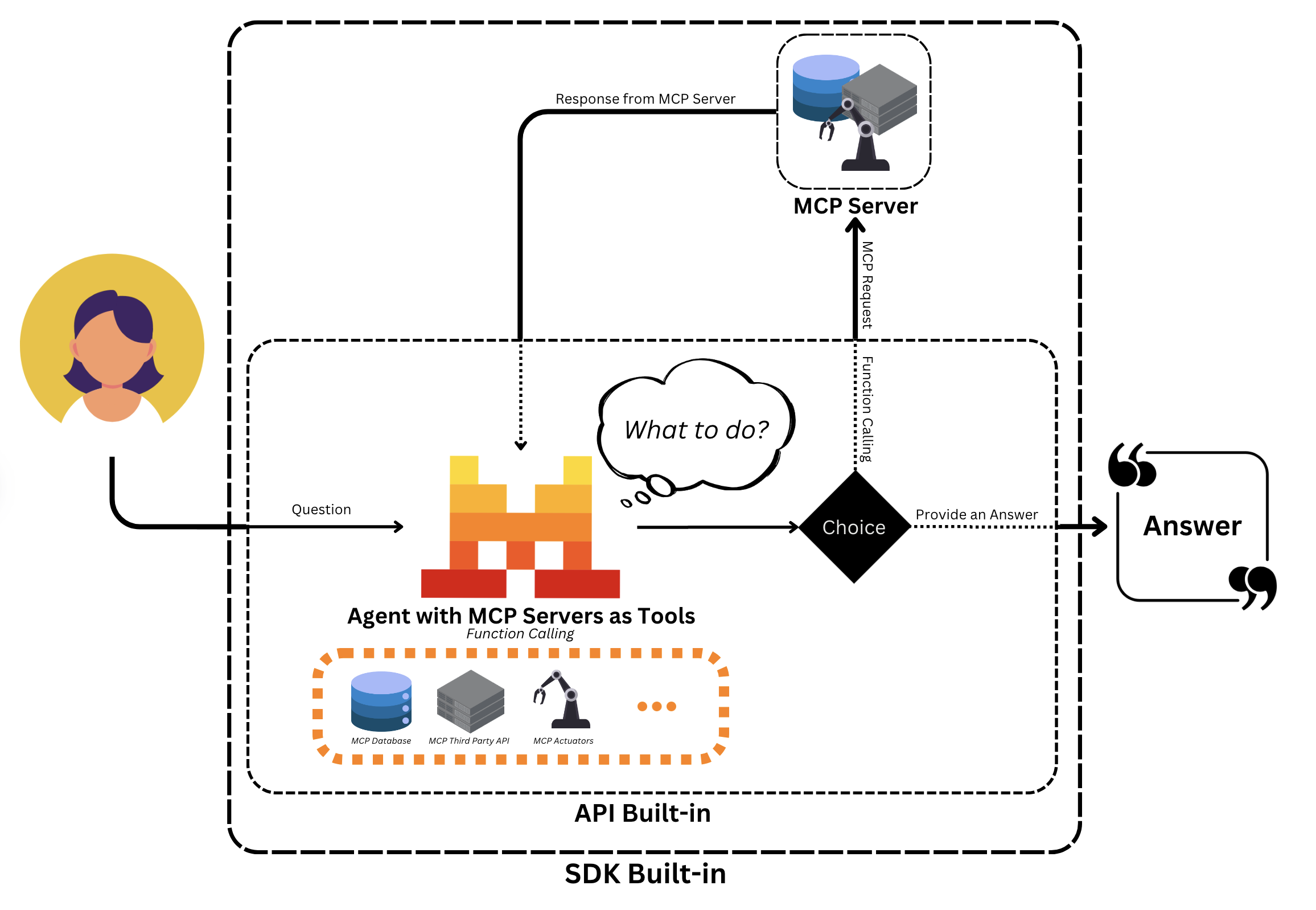

The Model Context Protocol (MCP) is an open standard for connecting AI models to external data sources and tools. It defines a common interface between AI applications, which connect through MCP clients, and MCP servers, which expose tools and data. An integration written once as an MCP server can therefore be reused by any compatible application. This gives AI systems secure, efficient access to the contextual information they need and makes robust, interconnected AI applications easier to build.

By replacing fragmented, one-off integrations with a single protocol, MCP connects AI models to live data and real-world systems, which helps them produce better, more relevant responses.
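The common interface is built on JSON-RPC 2.0: a client invokes a server tool by sending a `tools/call` request, and over the stdio transport used in the example on this page each message is written as one line of JSON. Below is a minimal stdlib sketch of building and framing such a request. The tool name `get_weather` and its arguments are hypothetical, and a real MCP session also performs an initialization handshake that is omitted here.

```python
import json
from typing import Any


def make_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def frame_for_stdio(message: dict[str, Any]) -> bytes:
    """Serialize one message for the stdio transport: a single line of JSON."""
    # json.dumps escapes newlines inside strings, so the only raw newline is the delimiter.
    return (json.dumps(message) + "\n").encode("utf-8")


def read_stdio_messages(stream: bytes) -> list[dict[str, Any]]:
    """Split a newline-delimited byte stream back into JSON-RPC messages."""
    return [json.loads(line) for line in stream.decode("utf-8").splitlines() if line.strip()]


if __name__ == "__main__":
    # Hypothetical tool call, for illustration only
    request = make_tool_call(1, "get_weather", {"location": "Paris"})
    print(frame_for_stdio(request))
```

Because each message occupies exactly one line, the receiving side can parse the stream line by line without any extra length headers.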

For more information on configuring and deploying your own MCP Server, refer to the Model Context Protocol documentation.

MCP Client Usage

Our Python SDK lets our agents connect to MCP servers through MCP clients, so the tools those servers expose become available to the agent.

MCP Setup

Below you can find examples of how to set up and use the MCP Client with our Python SDK.

The following example creates an agent that uses a local MCP server to fetch weather information based on a user's location, combining MCP integration with local function calling.

Step 1: Initialize the Mistral Client

First, import everything needed. Most of the required modules ship with our `mistralai` package, but you will also need the `mcp` package. MCP clients run asynchronously, so the main code lives in an async `main` function.

```python
#!/usr/bin/env python
import asyncio
import os
from pathlib import Path

from mcp import StdioServerParameters
from mistralai import Mistral
from mistralai.extra.mcp.stdio import MCPClientSTDIO
from mistralai.extra.run.context import RunContext
from mistralai.types import BaseModel

# Set the current working directory and the model to use
cwd = Path(__file__).parent
MODEL = "mistral-medium-latest"


async def main() -> None:
    # Initialize the Mistral client with your API key
    api_key = os.environ["MISTRAL_API_KEY"]
    client = Mistral(api_key=api_key)
```

Step 2: Define Server Parameters and Create an Agent

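We can now define the server parameters, which tell the MCP client how to launch the local server: here, by running `python` on the script `mcp_servers/stdio_server.py`, resolved relative to the current file. For more information, see the Model Context Protocol documentation. Once the server is defined, we can create our agent.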
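To make the stdio transport concrete: `StdioServerParameters` describes a server that the client starts as a child process (`command` plus `args`), after which both sides exchange newline-delimited messages over the child's stdin and stdout. The stdlib sketch below mimics that exchange with a toy server passed through `python -c`. The toy server and `exchange_one_message` are hypothetical; the toy skips the MCP initialization handshake, so it illustrates only the transport, not a real MCP server such as `stdio_server.py`.

```python
import json
import subprocess
import sys
from typing import Any

# Hypothetical toy server (not a real MCP server): it answers every JSON-RPC
# request line with a result that names the method it received.
TOY_SERVER = r"""
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    response = {"jsonrpc": "2.0", "id": request["id"], "result": {"method": request["method"]}}
    sys.stdout.write(json.dumps(response) + "\n")
    sys.stdout.flush()
"""


def exchange_one_message(command: str, args: list[str], request: dict[str, Any]) -> dict[str, Any]:
    """Start `command args` as a child process, send one request line, read one reply."""
    proc = subprocess.Popen(
        [command, *args],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        # communicate() writes the request, closes stdin (ending the server's loop) and waits.
        stdout, _ = proc.communicate(json.dumps(request) + "\n", timeout=10)
    except subprocess.TimeoutExpired:
        proc.kill()
        proc.communicate()
        raise
    return json.loads(stdout.splitlines()[0])


if __name__ == "__main__":
    reply = exchange_one_message(
        sys.executable, ["-c", TOY_SERVER], {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
    )
    print(reply)
```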

```python
    # Define parameters for the local MCP server
    server_params = StdioServerParameters(
        command="python",
        args=[str((cwd / "mcp_servers/stdio_server.py").resolve())],
        env=None,
    )

    # Create an agent to tell the weather
    weather_agent = client.beta.agents.create(
        model=MODEL,
        name="weather teller",
        instructions="You are able to tell the weather.",
        description="",
    )
```

Step 3: Define Output Format and Create a Run Context

Next, create a Run Context, which manages the interaction between the agent and the MCP client during the run. You can also request structured outputs by passing a model as `output_format`; here, `WeatherResult`.
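Conceptually, a structured output means the agent's final answer must be JSON that fits the `WeatherResult` fields, with the right types. As a stdlib analogue (the SDK's `BaseModel` is pydantic-based and does this for you), the sketch below validates such an answer into a dataclass. `parse_weather_result` is a hypothetical helper, and the sample values are illustrative only.

```python
import json
from dataclasses import dataclass, fields


# Stdlib stand-in for the WeatherResult model (the SDK version subclasses mistralai.types.BaseModel)
@dataclass
class WeatherResult:
    user: str
    location: str
    temperature: float


def parse_weather_result(raw_json: str) -> WeatherResult:
    """Check a JSON answer against the WeatherResult fields and convert the types."""
    data = json.loads(raw_json)
    missing = [f.name for f in fields(WeatherResult) if f.name not in data]
    if missing:
        raise ValueError(f"answer is missing fields: {missing}")
    return WeatherResult(
        user=str(data["user"]),
        location=str(data["location"]),
        temperature=float(data["temperature"]),
    )


if __name__ == "__main__":
    # Illustrative answer only; a real run gets this from the agent
    print(parse_weather_result('{"user": "John", "location": "Paris", "temperature": 18.5}'))
```

Rejecting answers with missing fields up front is what lets the calling code treat the result as a typed object rather than free text.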

```python
    # Define the expected output format for weather results
    class WeatherResult(BaseModel):
        user: str
        location: str
        temperature: float

    # Create a run context for the agent
    async with RunContext(
        agent_id=weather_agent.id,
        output_format=WeatherResult,
        continue_on_fn_error=True,
    ) as run_ctx:
```

Step 4: Register MCP Client

The next step is to create and register the MCP Client.

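```python
        # Create and register an MCP client with the run context
        mcp_client = MCPClientSTDIO(stdio_params=server_params)
        await run_ctx.register_mcp_client(mcp_client=mcp_client)
```

The Run Context can also handle function calling locally: decorate a Python function with `@run_ctx.register_func` and it becomes a tool the agent can call, alongside the tools exposed by the MCP server. In this example, `get_location` supplies the user's location that the weather query needs.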
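Conceptually, a registered function reaches the model as a function-calling tool: a name, a description, and a JSON Schema for its parameters. The stdlib sketch below derives such a description from the signature and docstring of a copy of the `get_location` function. `function_to_tool` is a hypothetical helper and a simplification (only basic types are mapped); it is not how `register_func` is actually implemented.

```python
import inspect
import random
from typing import Any, Callable, get_type_hints

# Map a few Python annotations to JSON Schema types (a simplification)
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool(fn: Callable[..., Any]) -> dict[str, Any]:
    """Describe `fn` in the {"type": "function", ...} tool format (hypothetical helper)."""
    hints = get_type_hints(fn)
    properties: dict[str, Any] = {}
    required: list[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown or missing annotations fall back to "string"
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    doc = inspect.getdoc(fn) or ""
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            # Use the first docstring line as the tool description
            "description": doc.splitlines()[0] if doc else "",
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


# Copy of the example function, used here only as input to the sketch
def get_location(name: str) -> str:
    """Function to get location of a user.

    Args:
        name: name of the user.
    """
    return random.choice(["New York", "London", "Paris", "Tokyo", "Sydney"])


if __name__ == "__main__":
    print(function_to_tool(get_location))
```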

```python
        import random  # typically placed with the other imports at the top of the file

        # Register a function that returns a random location for a user; it becomes an available tool
        @run_ctx.register_func
        def get_location(name: str) -> str:
            """Function to get location of a user.

            Args:
                name: name of the user.
            """
            return random.choice(["New York", "London", "Paris", "Tokyo", "Sydney"])
```

Step 5: Run the Agent and Print Results

Everything is ready: run the agent with a query and print the results.
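Conceptually, `run_async` takes care of the tool-calling loop: the agent requests tool calls, the Run Context executes them locally (MCP tools and registered functions), the results are sent back, and this repeats until the agent produces a final answer. The toy loop below sketches that orchestration with a scripted stand-in for the model. `fake_model`, `TOOLS`, the message shapes, and the `get_weather` tool are all hypothetical, and the SDK's actual loop will differ in detail.

```python
from typing import Any, Callable

# Hypothetical local tools, standing in for a registered function and an MCP tool
TOOLS: dict[str, Callable[..., Any]] = {
    "get_location": lambda name: "Paris",
    "get_weather": lambda location: {"location": location, "temperature": 18.5},
}


def fake_model(history: list[dict[str, Any]]) -> dict[str, Any]:
    """Scripted stand-in for the model: asks for two tools in turn, then answers."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool_call": {"name": "get_location", "arguments": {"name": "John"}}}
    if len(tool_results) == 1:
        location = tool_results[0]["content"]
        return {"tool_call": {"name": "get_weather", "arguments": {"location": location}}}
    weather = tool_results[1]["content"]
    return {"answer": {"user": "John", **weather}}


def run_loop(user_input: str, max_steps: int = 10) -> tuple[dict[str, Any], list[dict[str, Any]]]:
    """Call the model, execute requested tools locally, and repeat until it answers."""
    history: list[dict[str, Any]] = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = fake_model(history)
        if "answer" in reply:
            return reply["answer"], history
        call = reply["tool_call"]
        history.append({"role": "assistant", "tool_call": call})
        # Execute the requested tool locally and feed its result back
        result = TOOLS[call["name"]](**call["arguments"])
        history.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("no final answer within max_steps")


if __name__ == "__main__":
    answer, _ = run_loop("Tell me the weather in John's location currently.")
    print(answer)
```

The `max_steps` bound is a common safeguard in such loops so that a model that keeps requesting tools cannot run forever.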

```python
        # Run the agent with a query
        run_result = await client.beta.conversations.run_async(
            run_ctx=run_ctx,
            inputs="Tell me the weather in John's location currently.",
        )

        # Print the results
        print("All run entries:")
        for entry in run_result.output_entries:
            print(f"{entry}")
            print()
        print(f"Final model: {run_result.output_as_model}")


if __name__ == "__main__":
    asyncio.run(main())
```

Streaming Conversations

Streaming conversations with an agent using a local MCP server is similar to non-streaming, but instead of waiting for the entire response, you process the results as they arrive.

Here is a brief example of how to stream conversations:

```python
from mistralai.extra.run.result import RunResult  # add to the imports at the top of the file

# Inside the `async with RunContext(...) as run_ctx:` block, in place of the run_async call:
# stream the agent's responses
events = await client.beta.conversations.run_stream_async(
    run_ctx=run_ctx,
    inputs="Tell me the weather in John's location currently.",
)

# Process the streamed events: print intermediate events, keep the final RunResult
run_result = None
async for event in events:
    if isinstance(event, RunResult):
        run_result = event
    else:
        print(event)

if not run_result:
    raise RuntimeError("No run result found")

# Print the results
print("All run entries:")
for entry in run_result.output_entries:
    print(f"{entry}")
print(f"Final model: {run_result.output_as_model}")
```
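The streaming loop above prints intermediate events and keeps the final `RunResult`. That consumption pattern can be exercised without calling the API by running it against a stand-in async generator. `FakeRunResult` and `fake_stream` are hypothetical placeholders for the SDK's result and event types; the sketch assumes only what the loop above assumes: the stream yields intermediate events and then one final result object.

```python
import asyncio
from dataclasses import dataclass, field
from typing import AsyncIterator, Optional, Union


@dataclass
class FakeRunResult:
    """Hypothetical stand-in for the final RunResult object."""
    output_entries: list[str] = field(default_factory=list)


async def fake_stream() -> AsyncIterator[Union[str, FakeRunResult]]:
    """Yield a few placeholder events, then the final result."""
    for event in ["tool call: get_location", "tool call: get_weather", "message delta"]:
        await asyncio.sleep(0)  # hand control back to the event loop, as real I/O would
        yield event
    yield FakeRunResult(output_entries=["entry 1", "entry 2"])


async def consume(events: AsyncIterator[Union[str, FakeRunResult]]) -> tuple[list[str], FakeRunResult]:
    """Collect intermediate events and keep the final result, mirroring the loop above."""
    seen: list[str] = []
    run_result: Optional[FakeRunResult] = None
    async for event in events:
        if isinstance(event, FakeRunResult):
            run_result = event
        else:
            seen.append(event)
    if run_result is None:
        raise RuntimeError("No run result found")
    return seen, run_result


if __name__ == "__main__":
    seen, result = asyncio.run(consume(fake_stream()))
    print(seen)
    print(result.output_entries)
```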