Image Generation

Image Generation is a built-in tool that enables agents to generate images.

Enabling this tool allows models to create images at any given moment.

To use the image generation tool, create an agent with the tool enabled and use the conversations API to generate images. Note that you need to download each image using the file ID provided in the response.

Create an Image Generation Agent

You can create an agent with access to image generation by providing it as one of the tools. Note that you can still add more tools to the agent. The model is free to create images on demand.

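import os

from mistralai import Mistral

# Initialize the client (assumes the API key is stored in the MISTRAL_API_KEY environment variable)
client = Mistral(api_key=os.environ["MISTRAL_API_KEY"])

# Create an agent that has the image generation tool enabled
image_agent = client.beta.agents.create(
    model="mistral-medium-2505",
    name="Image Generation Agent",
    description="Agent used to generate images.",
    instructions="Use the image generation tool when you have to create images.",
    tools=[{"type": "image_generation"}],
    completion_args={
        "temperature": 0.3,
        "top_p": 0.95,
    },
)

As with other agents, creating one returns an agent ID corresponding to the created agent. You can use this ID to start a conversation.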
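Because the agent can carry more tools than image generation alone, it can help to assemble the creation arguments as a plain dictionary first and extend the tool list before making the call. The following is a minimal sketch under stated assumptions: the helper name `build_image_agent_kwargs` is hypothetical, and the `web_search` tool entry in the usage lines is an illustrative assumption rather than something taken from the example above.

```python
from typing import Any, Dict, List, Optional


def build_image_agent_kwargs(
    extra_tools: Optional[List[Dict[str, Any]]] = None,
) -> Dict[str, Any]:
    """Return keyword arguments for agent creation (hypothetical helper)."""
    # Image generation always comes first; any extra tools are appended after it.
    tools: List[Dict[str, Any]] = [{"type": "image_generation"}]
    tools.extend(extra_tools or [])
    return {
        "model": "mistral-medium-2505",
        "name": "Image Generation Agent",
        "description": "Agent used to generate images.",
        "instructions": "Use the image generation tool when you have to create images.",
        "tools": tools,
        "completion_args": {"temperature": 0.3, "top_p": 0.95},
    }


# Usage: the "web_search" entry is an assumed example of an additional tool.
kwargs = build_image_agent_kwargs([{"type": "web_search"}])
print([tool["type"] for tool in kwargs["tools"]])
```

With an initialized client, the result could then be passed along as `client.beta.agents.create(**kwargs)`.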

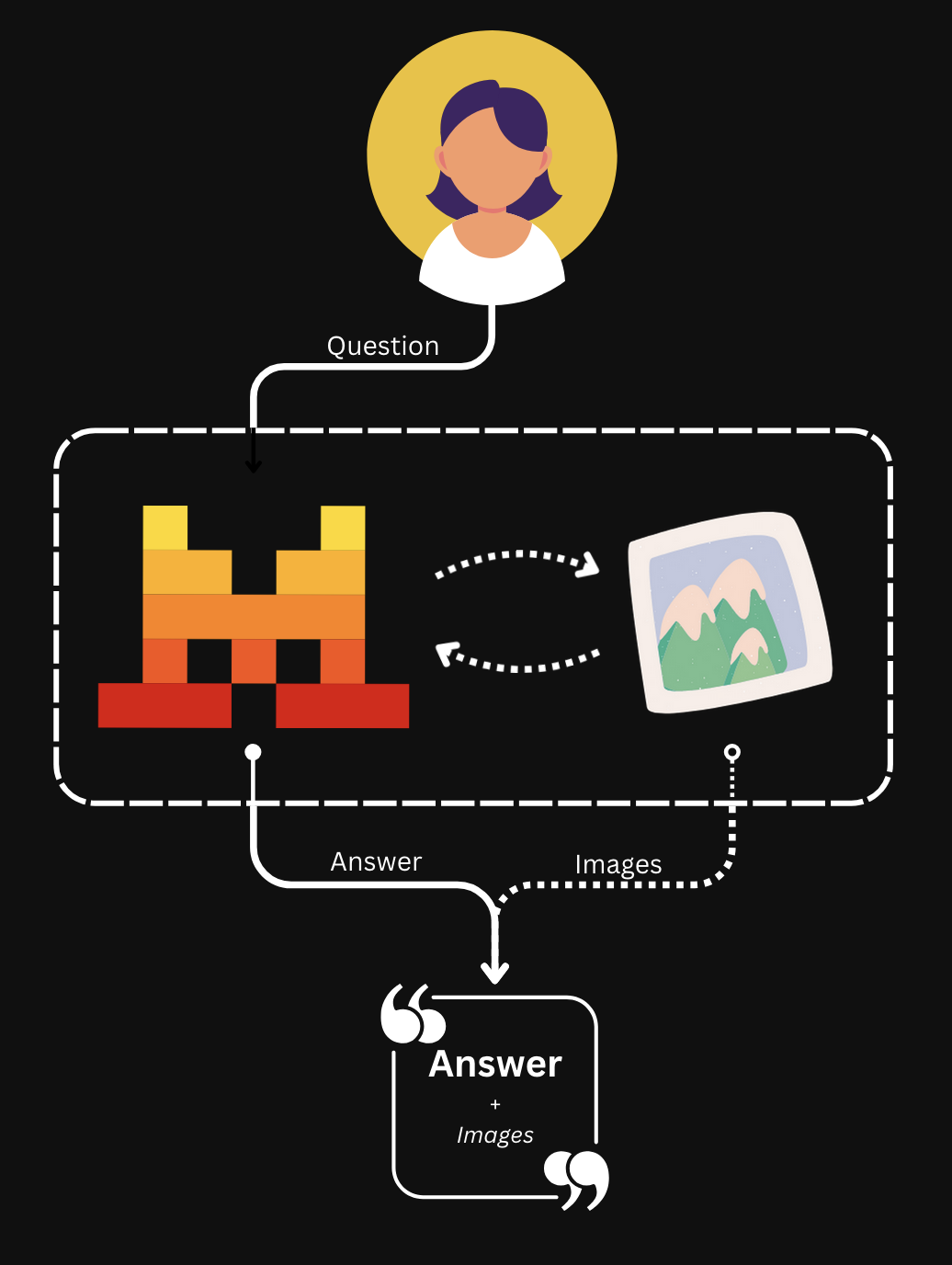

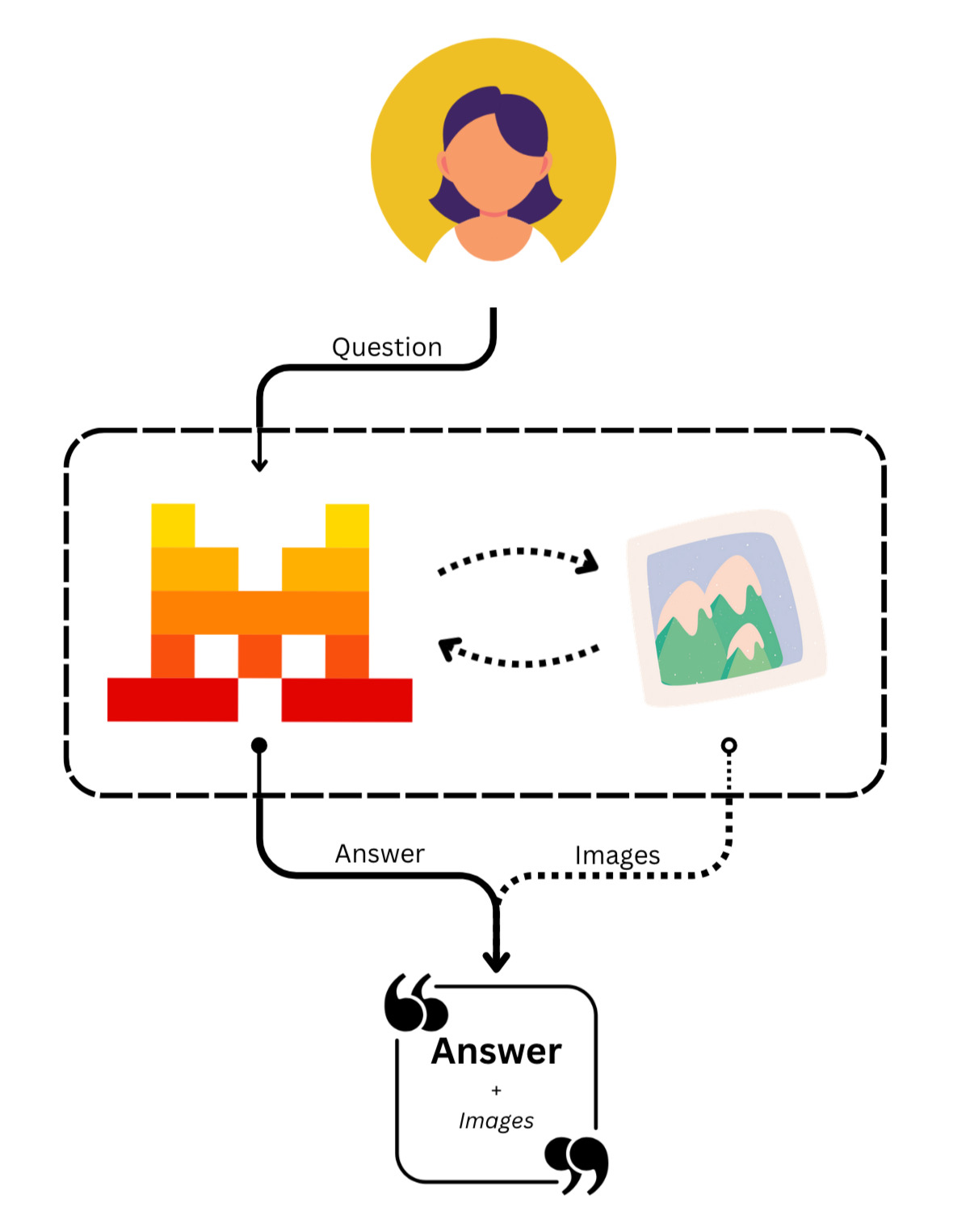

How it Works

Now that we have our image generation agent ready, we can create images on demand at any point.

Conversations with Image Generation

To start a conversation with our image generation agent, we can use the following code:

response = client.beta.conversations.start(
    agent_id=image_agent.id,
    inputs="Generate an orange cat in an office.",
)

Explanation of the Outputs

The response to the previous snippet contains the following outputs:

- tool.execution: This entry corresponds to the execution of the image generation tool. It includes metadata about the execution, such as:
  - name: The name of the tool, which in this case is image_generation.
  - object: The type of object, which is entry.
  - type: The type of entry, which is tool.execution.
  - created_at and completed_at: Timestamps indicating when the tool execution started and finished.
  - id: A unique identifier for the tool execution.

- message.output: This entry corresponds to the generated answer from our agent. It includes metadata about the message, such as:
  - content: The actual content of the message, which in this case is a list of chunks. These chunks can be of different types, and the model can interleave text chunks with other chunk types to complete the message. In this case, we got two chunks: a text chunk and a tool_file chunk, which represents the generated file, specifically the generated image. The tool_file chunk includes:
    - tool: The name of the tool used for generating the file, which in this case is image_generation.
    - file_id: A unique identifier for the generated file.
    - type: The type of chunk, which in this case is tool_file.
    - file_name: The name of the generated file.
    - file_type: The type of the generated file, which in this case is png.
  - object: The type of object, which is entry.
  - type: The type of entry, which is message.output.
  - created_at and completed_at: Timestamps indicating when the message was created and completed.
  - id: A unique identifier for the message.
  - agent_id: A unique identifier for the agent that generated the message.
  - model: The model used to generate the message, which in this case is mistral-medium-2505.
  - role: The role of the message, which is assistant.
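To make the structure described above more concrete, here is a minimal sketch that walks a list of entries and collects the tool_file chunks. It uses plain dictionaries shaped like the fields listed above. The sample values (IDs, text, file name) and the helper name `collect_tool_files` are made up for illustration, and the SDK itself returns typed objects rather than dictionaries.

```python
from typing import Any, Dict, List


def collect_tool_files(outputs: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return every tool_file chunk found in message.output entries."""
    files: List[Dict[str, Any]] = []
    for entry in outputs:
        # Only message.output entries carry content chunks.
        if entry.get("type") != "message.output":
            continue
        content = entry.get("content", [])
        # Content may also be a plain string, which holds no file chunks.
        if isinstance(content, str):
            continue
        for chunk in content:
            if chunk.get("type") == "tool_file":
                files.append(chunk)
    return files


# Hypothetical sample mirroring the two entries described above.
sample_outputs = [
    {
        "object": "entry",
        "type": "tool.execution",
        "name": "image_generation",
        "id": "tool_exec_example",
    },
    {
        "object": "entry",
        "type": "message.output",
        "role": "assistant",
        "model": "mistral-medium-2505",
        "content": [
            {"type": "text", "text": "Here is your image."},
            {
                "type": "tool_file",
                "tool": "image_generation",
                "file_id": "file_example",
                "file_name": "image_generated_0",
                "file_type": "png",
            },
        ],
    },
]

found = collect_tool_files(sample_outputs)
print([chunk["file_id"] for chunk in found])
```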

Download Images

To access the generated image, download it via our files endpoint using the file ID from the tool_file chunk.

# file_chunk is the ToolFileChunk found in the content of the message.output entry
# Download using the ToolFileChunk ID
file_bytes = client.files.download(file_id=file_chunk.file_id).read()

# Save the file locally
with open("image_generated.png", "wb") as file:
    file.write(file_bytes)

A full code snippet to download all generated images from a response could look like this:

from mistralai.models import ToolFileChunk

for i, chunk in enumerate(response.outputs[-1].content):
    # Check if chunk corresponds to a ToolFileChunk
    if isinstance(chunk, ToolFileChunk):
        # Download using the ToolFileChunk ID
        file_bytes = client.files.download(file_id=chunk.file_id).read()

        # Save the file locally
        with open(f"image_generated_{i}.png", "wb") as file:
            file.write(file_bytes)
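The loop above hard-codes the .png extension. Since each tool_file chunk also carries file_name and file_type, a small helper could derive the local file name from the chunk instead. This is only a sketch: `local_name_for` is a hypothetical helper, and it assumes either attribute may be missing, in which case it falls back to the naming used in the snippet above.

```python
from types import SimpleNamespace
from typing import Any


def local_name_for(chunk: Any, index: int) -> str:
    """Build a local file name from a chunk's file_name and file_type."""
    # Fall back to the index-based name and png when metadata is absent.
    name = getattr(chunk, "file_name", None) or f"image_generated_{index}"
    ext = getattr(chunk, "file_type", None) or "png"
    # Avoid doubling the extension if the name already ends with it.
    if name.lower().endswith(f".{ext.lower()}"):
        return name
    return f"{name}.{ext}"


# Stand-in object with the attributes described for tool_file chunks.
example_chunk = SimpleNamespace(file_name="image_generated_0", file_type="png")
print(local_name_for(example_chunk, 0))
```

Inside the download loop, the result could replace the literal file name passed to `open`.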